The only constant is change, as the old saying goes. As technological and social change affects how we interact and engage with one another, it’s critical that libraries continually seek information about the evolving expectations of our community. Observations and usage trends provide valuable data that help orient our direction, but sometimes the best way to surface user expectations is to just ask.

At the end of February, the Libraries launched a campus-wide survey to a random sample of students, faculty, and, for the first time, staff. If you happened to be among those who received an invitation and responded, we offer our thanks. If you didn’t get an invite, maybe we’ll catch you next time. The Libraries typically conduct a survey every two or three years to keep tabs on how we are doing. It’s a great way to see what’s working well, what we might consider changing, and, in some cases, what we might stop doing.

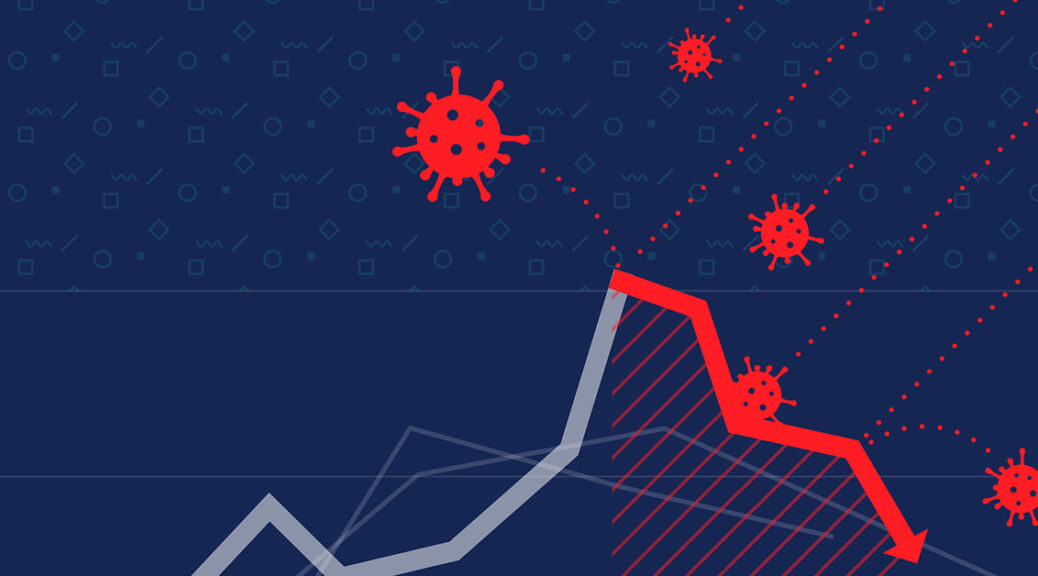

The 2022 survey is particularly important because it is the Libraries’ first survey since before the pandemic. The original plan was to conduct the survey in fall 2020, but things felt too different, too anomalous at that time to get an accurate picture. Many of us were not on campus, and many of our circumstances were strained or unusual. We wanted the survey to represent longer-term trends (inasmuch as those exist anymore) rather than a snapshot of perceptions during the campus’s most restrictive COVID-related policies. The last time we surveyed the campus was in spring 2018, so we’re eager to see what has changed and what has stayed the same over the last four turbulent years.

For the first time, we developed and wrote our own survey instrument. This has long been a goal of mine, not because there aren’t great survey instruments available to libraries, but because we wanted to tailor our questions to our unique population and circumstances. Industry-standard instruments we’ve used in the past, such as those created by LibQUAL and Ithaka S+R, allowed us to compare trends at UT to national trends, which is a valuable exercise. This time, though, we wanted to focus on the perceptions, experiences, and needs of the Longhorn community. With support from folks around campus (especially the staff in IRRIS) and a seasoned assessment team, we took on the challenge of writing, administering, and analyzing our own survey, designed to provide us with actionable feedback.

In the coming months, we’ll be using this space to share some of the findings from the survey as we work through our analysis, and eventually, we will have full results to share with the public. Topics that you can expect to see addressed here include longitudinal insights (i.e., where we see trends and perceptions evolving over the long term), spotlights on insights gleaned about different demographic groups, and other interesting tidbits. We won’t just tell you what we find interesting, though—we want to highlight what we’re doing with the results. Expect to read about areas that we want to investigate further with focus groups and interviews, and changes that we’re putting in place based on what we learn.

A sneak peek at a survey item focused on satisfaction shows that, for the most part, folks are pretty happy with us, and in particular with the Libraries’ services. But there is more still to be learned from a thorough review of the data:

- Does that hold true across all demographic groups?

- What can we learn about those who aren’t satisfied?

These are the kinds of questions we’ll be asking ourselves and our users as we sift through the results. We invite you to participate in an ongoing exploration of the data we’ve collected so that we can learn even more from the process of analysis as we seek to improve the work of these Libraries.

The Libraries’ roadmap for success depends largely on hearing both the praise and criticism of our users, so take an opportunity to help improve your UT Libraries by providing your own input, feedback and observations as we plan together for the best possible future.